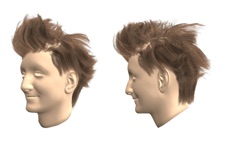

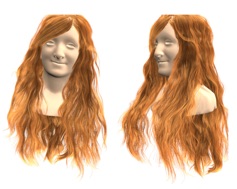

Single-View Hair Modeling Using A Hairstyle Database

Copyright © 2014 Hao Li

Human hair exhibits highly convoluted structures and spans an extraordinarily wide range of hairstyles. It is essential for the digitization of compelling virtual avatars, yet it is also one of the most challenging elements to create. Cutting-edge hair modeling techniques typically rely on expensive capture devices and significant manual labor. We introduce a novel data-driven framework that can digitize complete and highly complex 3D hairstyles from a single-view photograph. We first construct a large database of manually crafted hair models from several online repositories. Given a reference photo of the target hairstyle and a few user strokes as guidance, we automatically search the database for multiple best-matching examples and combine them consistently into a single hairstyle that forms the large-scale structure of the hair model. We then synthesize the final hair strands by jointly optimizing for the projected 2D similarity to the reference photo, the physical plausibility of each strand, and the local orientation coherency between neighboring strands. We demonstrate the effectiveness and robustness of our method on a variety of hairstyles and challenging images, and compare our system with state-of-the-art hair modeling algorithms.
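
To make the joint strand optimization more concrete, the following is a minimal sketch of how three such terms could be combined into one energy for a single strand. It is an illustration only, not the formulation used in the paper: the function names, the equal default weights, the orthographic projection, and the use of bending as a stand-in for physical plausibility are all assumptions introduced here.

```python
import math

def _unit(v):
    """Normalize a vector; return zeros if its length is zero."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v) if n > 0 else tuple(0.0 for _ in v)

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def tangents(strand):
    """Unit tangents of a strand given as a list of (x, y, z) points."""
    return [_unit(tuple(q_i - p_i for p_i, q_i in zip(p, q)))
            for p, q in zip(strand, strand[1:])]

def image_term(strand, target_dirs_2d):
    """Hypothetical 2D similarity term: compares projected tangents
    (orthographic projection, z dropped) with target 2D directions.
    Squaring the dot product makes the comparison sign-insensitive."""
    total = 0.0
    for t, d in zip(tangents(strand), target_dirs_2d):
        total += 1.0 - _dot(_unit(t[:2]), _unit(d)) ** 2
    return total

def bending_term(strand):
    """Hypothetical plausibility proxy: penalizes sharp bends
    between consecutive segments of the same strand."""
    ts = tangents(strand)
    return sum(1.0 - _dot(a, b) for a, b in zip(ts, ts[1:]))

def coherence_term(strand, neighbor):
    """Hypothetical coherence term: penalizes tangent disagreement
    between a strand and one neighboring strand, segment by segment."""
    return sum(1.0 - _dot(a, b)
               for a, b in zip(tangents(strand), tangents(neighbor)))

def joint_energy(strand, neighbor, target_dirs_2d,
                 w_img=1.0, w_phys=1.0, w_coh=1.0):
    """Weighted sum of the three terms; the weights are assumed values."""
    return (w_img * image_term(strand, target_dirs_2d)
            + w_phys * bending_term(strand)
            + w_coh * coherence_term(strand, neighbor))

# Toy data: a straight strand, a bent strand, and a straight neighbor.
straight = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 2.0, 0.0)]
bent = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
neighbor = [(1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (1.0, 2.0, 0.0)]
target = [(0.0, 1.0), (0.0, 1.0)]  # reference directions point along +y

e_straight = joint_energy(straight, neighbor, target)
e_bent = joint_energy(bent, neighbor, target)
```

In this toy setup the straight strand agrees with the target directions and with its neighbor, so it receives a lower energy than the bent strand. An actual optimizer would adjust the strand vertices to reduce such an energy rather than only evaluate it.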
