Multi-View Hair Capture Using Orientation Fields

Copyright © 2014 Hao Li

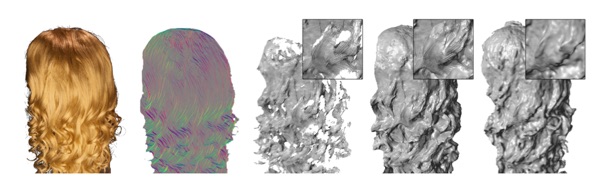

Reconstructing realistic 3D hair geometry is challenging due to omnipresent occlusions, complex discontinuities, and specular appearance. To address these challenges, we propose a multi-view hair reconstruction algorithm based on orientation fields with structure-aware aggregation. Our key insight is that while hair’s color appearance is view-dependent, the response to oriented filters, which captures the local hair orientation, is more stable. We apply structure-aware aggregation to the MRF matching energy to enforce the structural continuities implied by the local hair orientations. Multiple depth maps from the MRF optimization are then fused into a globally consistent hair geometry with a template refinement procedure. Compared to state-of-the-art color-based methods, our method faithfully reconstructs detailed hair structures. We demonstrate results for a number of hair styles, ranging from straight to curly, and show that our framework is suitable for capturing hair in motion.
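To make the notion of an orientation field concrete, the following is a minimal sketch of how a per-pixel orientation could be estimated from a bank of oriented filters. It is not the paper's implementation: the abstract only says "oriented filters", so the choice of complex Gabor kernels, the function names (`gabor_kernel`, `filter_same`, `orientation_field`), and all parameter values (`size`, `sigma`, `wavelength`, `num_orientations`) are assumptions made for illustration.

```python
import numpy as np

def gabor_kernel(theta, size=15, sigma=3.0, wavelength=6.0):
    """Complex Gabor kernel whose carrier oscillates along direction theta.

    Assumed filter choice for illustration; parameters are hypothetical.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Coordinate along the oscillation direction (across the strands).
    u = x * np.cos(theta) + y * np.sin(theta)
    envelope = np.exp(-(x**2 + y**2) / (2.0 * sigma**2))
    kernel = envelope * np.exp(1j * 2.0 * np.pi * u / wavelength)
    # Remove the DC component so flat regions give (near) zero response.
    return kernel - kernel.mean()

def filter_same(image, kernel):
    """Circular convolution via FFT; output has the same shape as image."""
    kh, kw = kernel.shape
    padded = np.zeros(image.shape, dtype=complex)
    padded[:kh, :kw] = kernel
    # Shift the kernel centre to the origin so the output is not translated.
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded))

def orientation_field(image, num_orientations=16):
    """Return per-pixel strand orientation in [0, pi) and a confidence map."""
    thetas = np.arange(num_orientations) * np.pi / num_orientations
    # Response magnitude of each oriented filter at every pixel.
    responses = np.stack(
        [np.abs(filter_same(image, gabor_kernel(t))) for t in thetas]
    )
    best = np.argmax(responses, axis=0)
    # Strands run perpendicular to the direction of intensity oscillation.
    orientation = (thetas[best] + np.pi / 2.0) % np.pi
    confidence = responses.max(axis=0)
    return orientation, confidence
```

Because the FFT-based filtering wraps around at the image border, values within about half a kernel width of the edge should be ignored. The returned confidence is simply the strongest filter response; a real pipeline would likely use a more careful measure.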


Figure panels: input photograph; orientation field; depth map; final merged result; PMVS + Poisson; capture setup.